What makes VisAI optimized?

There are a few design choices that allow VisAI to be optimized.

At its core, all the Behaviour Foundation needs to calculate a decision is a set of input and output scores. Every decision therefore comes down to float comparisons, which are very cheap. You can realistically run hundreds of AI with minor optimizations, and even more with further optimization.

If you own the framework, check out the Woodcutter example. It is only lightly optimized, using some of the strategies described here, and its example map spawns 250 AI comfortably on modern systems.

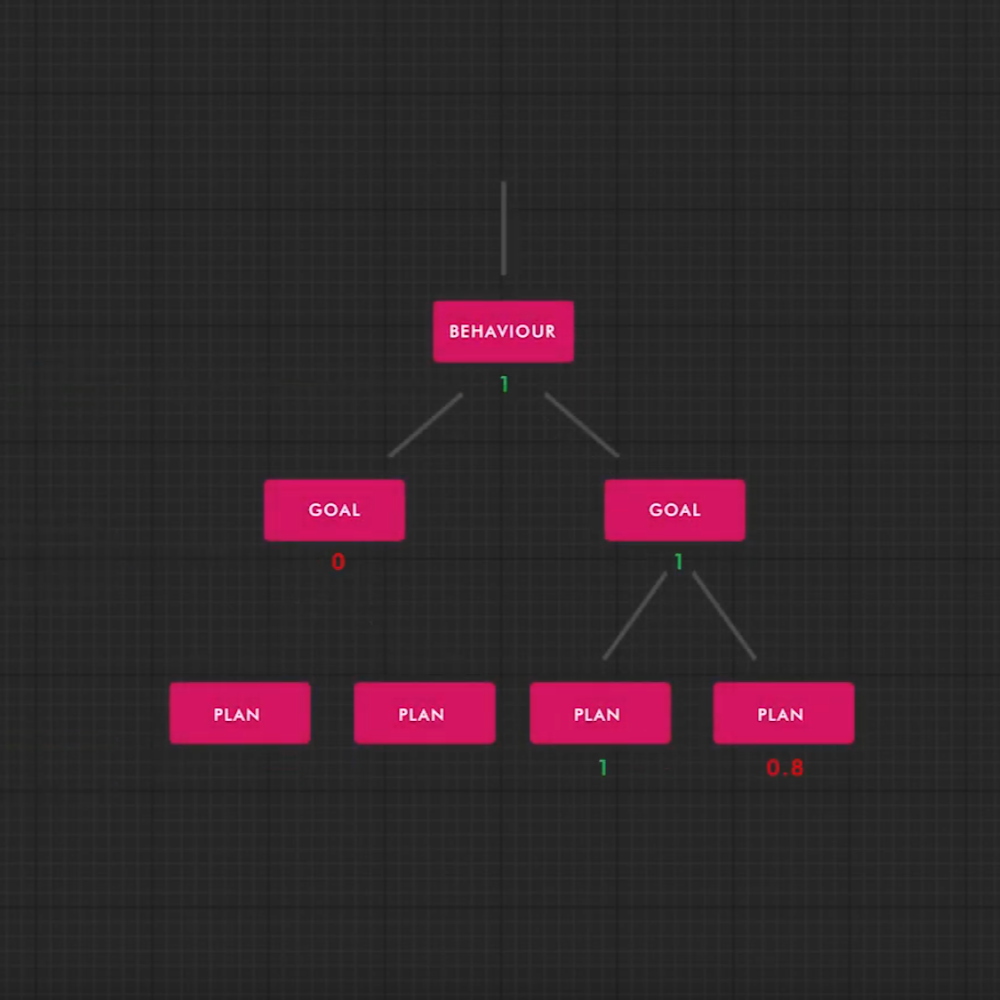

VisAI’s hierarchy also only calculates what is necessary in order to function. You can see this in action below:

On top of VisAI’s design itself being optimized, all of the systems included with VisAI have been optimized as well, through extensive pre-planning and research into each system’s specific needs.

In order to properly optimize your AI, you need to know how to read the performance of your project. If you’re not familiar with the tools Unreal has available, be sure to check out some of the links below before continuing. You’ll likely learn something new!

Videos

- Unreal Stream | Unreal Insights

- Unreal Stream | Collect, Analyze, and Visualize Your Data with Unreal Insights.

Documentation

- Unreal Documentation | Unreal Insights

- Unreal Documentation | Performance & Profiling

- Blog | Unreal Optimization

There are various ways that you can optimize your AI, and they mostly depend on what your desired AI will need to do. Look through the list of suggestions below to see if there are any you could apply to your AI.

(mostly) Static Decision Data

Let’s say we have an AI that needs to pick up all the nearby trash, and we’re creating its decision calculation.

If you’ve built with VisAI before, you might be tempted to do something like “Get All Actors Of Class –> Check array for trash references”. However, this would cause “Get All Actors Of Class”, a very expensive function, to be called every time your AI needs to make a decision. This is undesirable.

Instead, we could create a separate system (attached to the AI) that “listens” for new trash (typically with Event Dispatchers or an Interface). The listener would then update a local array of trash references, which the AI could read from when making decisions.

This drastically reduces the cost of the decision to check for trash. Instead of calling “Get All Actors Of Class”, the decision merely reads the length of a local array.
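The listener idea above can be sketched in plain C++. This is an illustration only and not VisAI's own API: `Trash`, `TrashListener`, `ScorePickUpTrash`, and the saturation count are hypothetical names and values, and in a real project the two `On...` functions would be bound to whatever Event Dispatcher or Interface announces trash.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a trash actor reference.
struct Trash {
    int Id;
};

// Hypothetical listener owned by one AI. It is told when trash appears or
// disappears, so the decision never has to search the whole world.
class TrashListener {
public:
    // Call from the event that announces new trash.
    void OnTrashSpawned(const Trash& NewTrash) { KnownTrash.push_back(NewTrash); }

    // Call when trash is picked up or destroyed.
    void OnTrashRemoved(int TrashId) {
        KnownTrash.erase(
            std::remove_if(KnownTrash.begin(), KnownTrash.end(),
                           [TrashId](const Trash& T) { return T.Id == TrashId; }),
            KnownTrash.end());
    }

    std::size_t Count() const { return KnownTrash.size(); }

private:
    std::vector<Trash> KnownTrash;  // the local array the decision reads
};

// Decision score derived only from the cached array length.
// The 0-to-1 range and SaturationCount are assumptions for illustration.
float ScorePickUpTrash(const TrashListener& Listener, std::size_t SaturationCount = 5) {
    if (SaturationCount == 0) return 0.0f;
    const float Raw = static_cast<float>(Listener.Count()) /
                      static_cast<float>(SaturationCount);
    return std::min(Raw, 1.0f);
}
```

The expensive work happens once per spawn or removal event, while each decision only reads a size and does one division.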

Manual Decision Indicators

What if, instead of leaving our decision calculation on a timer (the default), we called the Manual Decision Event every time trash was placed on the ground? The AI would then only check when the amount of trash on the ground changed.

This lets us make fewer decisions, assuming that trash isn’t added more often than a decision would normally be checked. By default, decisions are checked every 0.65 seconds.

You can use manual decision indicators by themselves by simply turning off auto decision scoring in the settings (Controller/Component). You can also use them in conjunction with auto scoring by adjusting how quickly behaviours/goals/plans are scored.

Note: This can drastically cut down the performance cost in some scenarios, but may not be useful in others. For example, in an FPS-type situation it might seem like a good idea, but it would likely generate a high number of unnecessary updates during intense battles, making a timer the better solution.
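The trade-off between timed and manual scoring can be sketched as follows. This is a minimal standalone C++ illustration, not VisAI's implementation: `DecisionScheduler`, `bAutoScoring`, `IntervalSeconds`, and `RequestManualDecision` are hypothetical names, and only the 0.65 second default comes from the text above.

```cpp
#include <functional>
#include <utility>

// Hypothetical scheduler that runs a decision either on a timer,
// on demand, or both.
class DecisionScheduler {
public:
    explicit DecisionScheduler(std::function<void()> InEvaluate)
        : Evaluate(std::move(InEvaluate)) {}

    bool bAutoScoring = true;       // turn off to rely on manual requests only
    float IntervalSeconds = 0.65f;  // timer period used when auto scoring is on

    // Call once per frame with the frame's delta time.
    void Tick(float DeltaSeconds) {
        if (!bAutoScoring) return;
        Elapsed += DeltaSeconds;
        if (Elapsed >= IntervalSeconds) {
            Elapsed = 0.0f;
            Evaluate();
        }
    }

    // Call from the event that changes the decision's inputs,
    // for example when trash is placed on the ground.
    void RequestManualDecision() { Evaluate(); }

private:
    std::function<void()> Evaluate;
    float Elapsed = 0.0f;
};
```

With auto scoring off, the evaluation count tracks the number of events exactly, which is why a burst of events (the battle case in the note) can cost more than a fixed timer would.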

Semi-Centralized Data Management

If you were using a higher number of AI, let’s say 1,000, you might want to use a centralized management system to feed some of the data to your AI. This reduces the need for every AI to think for itself, as the management system does a lot of the heavy lifting.

The management system would work very similarly to static decision data. Instead of a local system (as we used for static decisions), we can use a “Manager class” and an Interface (Blueprint/C++) to delegate our task, “trash pickup”, to the appropriate AI.

- The AI Decision System references a local variable, in this case, an array of “Trashes Queued”, to determine if it should pick up trash.

- The Manager Class listens for new trash (likely through Event Dispatchers or another Interface) and, when new trash is found, picks the closest AI for the job and delegates the task.

All of this reduces the number of calls the AI makes to get data, and reduces the amount of data being processed.
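The two roles described above might look like this in a standalone C++ sketch. All names here (`Vec2`, `TrashJob`, `Agent`, `TrashManager`) are hypothetical, and 2D squared distance is an assumed stand-in for whatever "closest" means in your project.

```cpp
#include <limits>
#include <vector>

struct Vec2 {
    float X;
    float Y;
};

// Hypothetical description of one piece of trash to collect.
struct TrashJob {
    int TrashId;
    Vec2 Location;
};

// Hypothetical AI: its decision only reads the local TrashesQueued array.
struct Agent {
    Vec2 Location;
    std::vector<TrashJob> TrashesQueued;
};

// Hypothetical manager: one object does the searching for every AI.
class TrashManager {
public:
    void RegisterAgent(Agent* InAgent) {
        if (InAgent) Agents.push_back(InAgent);
    }

    // Call when new trash is reported. Queues the job on the closest
    // registered agent and returns it, or nullptr if there are no agents.
    Agent* OnTrashSpawned(const TrashJob& Job) {
        Agent* Closest = nullptr;
        float BestDistSq = std::numeric_limits<float>::max();
        for (Agent* Candidate : Agents) {
            const float DX = Candidate->Location.X - Job.Location.X;
            const float DY = Candidate->Location.Y - Job.Location.Y;
            const float DistSq = DX * DX + DY * DY;  // no square root needed to compare
            if (DistSq < BestDistSq) {
                BestDistSq = DistSq;
                Closest = Candidate;
            }
        }
        if (Closest) Closest->TrashesQueued.push_back(Job);
        return Closest;
    }

private:
    std::vector<Agent*> Agents;  // non-owning; agents must outlive the manager
};
```

Each piece of trash triggers one pass over the agents in the manager, and only the chosen agent's queue changes, so the other agents' decisions see no new data to process.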

Adjusting Decision Settings

It’s best to fine-tune your Decision settings to get the best balance of performance and quality for your AI. Behaviours, Goals, and Plans are each scored separately, on their own timers. You can adjust the frequency of each and toggle them on or off through the settings.
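To make the effect of these settings concrete, here is a small standalone C++ sketch of three independently timed layers. It is not VisAI's settings API: `Layer`, `LayerSettings`, and `LayeredScoring` are hypothetical, and using 0.65 seconds as the starting interval for every layer is an assumption.

```cpp
#include <array>
#include <cstddef>

enum class Layer : std::size_t { Behaviours = 0, Goals = 1, Plans = 2 };

// Hypothetical per-layer settings: an on/off toggle and a scoring interval.
struct LayerSettings {
    bool bEnabled = true;
    float IntervalSeconds = 0.65f;  // assumed starting value for every layer
};

class LayeredScoring {
public:
    LayerSettings& Settings(Layer InLayer) {
        return Config[static_cast<std::size_t>(InLayer)];
    }

    int TimesScored(Layer InLayer) const {
        return Counts[static_cast<std::size_t>(InLayer)];
    }

    // Advances every enabled layer's timer; disabled layers cost nothing.
    void Tick(float DeltaSeconds) {
        for (std::size_t i = 0; i < Config.size(); ++i) {
            if (!Config[i].bEnabled) continue;
            Elapsed[i] += DeltaSeconds;
            if (Elapsed[i] >= Config[i].IntervalSeconds) {
                Elapsed[i] = 0.0f;
                ++Counts[i];  // a real system would score this layer here
            }
        }
    }

private:
    std::array<LayerSettings, 3> Config{};
    std::array<float, 3> Elapsed{};
    std::array<int, 3> Counts{};
};
```

Doubling a layer's interval halves how often it is scored, and disabling a layer removes its cost entirely, so slower-changing layers are natural candidates for longer intervals.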

Turning Off Unused Features

It’s incredibly easy to turn off unused features with VisAI. Simply navigate to the AI controller you’re using and either remove any components you’re not using or turn the system off in its settings. Don’t hesitate to reach out for support if you get stuck.
